Figure 1. International Business Machines Corporation (IBM). “Smarter Planet” advertisement campaign, 2008. Detail. From Wall Street Journal, 17 November 2008.


On November 6, 2008, still in the immediate aftermath of the worldwide economic crisis initiated by the U.S. subprime mortgage market collapse, then IBM chair Sam Palmisano delivered a speech at the Council on Foreign Relations in New York City. The council is one of the foremost think tanks in the United States, its membership comprising senior figures in government, the intelligence community (including the Central Intelligence Agency), business leaders, financiers, lawyers, and the media. Yet Palmisano was not there to discuss the fate of the global economy. Rather, he introduced his corporation’s vision of the future in a talk titled “A Smarter Planet.” In glowing terms, Palmisano laid out a vision of fiber-optic cables, high-bandwidth infrastructure, seamless supply-chain and logistical capacity, a clean environment, and eternal economic growth, all of which were to be the preconditions for a “smart” planet. IBM, he argued, would lead the globe to the next frontier, a network beyond social networks and mere Twitter chats. This future world would come into being through the integration of human beings and machines into a seamless “Internet of things” that would generate the data necessary for organizing production and labor, enhancing marketing, facilitating democracy and prosperity, and—perhaps most important—for enabling a mode of automated, and seemingly apolitical, decision-making that would guarantee the survival of the human species in the face of pressing environmental challenges. In Palmisano’s talk, “smartness” named the interweaving of dynamic, emergent computational networks with the goal of producing a more resilient human species—that is, a species able to absorb and survive environmental, economic, and security crises by means of perpetually optimizing and adapting technologies.1

Palmisano’s speech was notable less for its content, which to a degree was an amalgamation of existing claims about increased bandwidth, complexity, and ecological salvation, than for the way in which its economic context and its planetary terminology made explicit a hitherto tacit political promise that has attended the rise of “smart” technologies. Though IBM had capitalized for decades on terms associated with intelligence and thought—its earlier trademarked corporate slogan was “Think”—smart was by 2008 an adjective attached to many kinds of computer-mediated technologies and places, including phones, houses, cars, classrooms, bombs, chips, and cities. Palmisano’s “smarter planet” tagline drew on aspects of these earlier invocations of smartness, and especially the notion that smartness required an extended infrastructure that produced an environment able to automate many human processes and respond in real time to human choices. His speech also underscored that smartness demanded an ongoing penetration of computing into infrastructure to mediate daily perceptions of life. (Smartphones, for example, are part of a discourse in which the world is imagined as networked, interactive, and constantly accessible through technological interfaces, their touch screens enabled by an infrastructure of satellite networks, server farms, and cellular towers, among many other structures that facilitate the regular accessing of services, goods, and spatial location data.) But as Palmisano’s speech made clear, these infrastructures now demanded an “infrastructural imaginary”—an orienting telos about what smartness is and does. This imaginary redefined no less than the relationships among technology, human sense perception, and cognition. With this extension of smartness to both the planet and the mind, what had been a corporate tagline became a governing project able to individuate a citizen and produce a global polity.

Figure 2. International Business Machines Corporation (IBM). “Smarter Planet” advertisement campaign, 2008. Detail. From Wall Street Journal, 17 November 2008.


This new vision of smartness is inextricably tied to the language of crisis, whether a financial, ecological, or security event. But where others might see the growing precariousness of human populations as best countered by conscious planning and regulation, advocates of smartness instead see opportunities to decentralize agency and intelligence by distributing it among objects, networks, and life forms. They predict that environmentally extended smartness will take the place of deliberative planning, allowing for resilience in a perpetually transforming world. Palmisano proposed “infus[ing] intelligence into decision making” itself.2 What Palmisano presented in 2008 as the mandate of a single corporation is central to much contemporary design and engineering thinking more generally.

We call these promises about computation, complexity, integration, ecology, and crisis “the smartness mandate.” We use this phrase to mark the fact that the assumptions and goals of “smart” technologies are widely accepted in global polity discussions and that they have encouraged the creation of novel infrastructures that organize environmental policy, energy policy, supply chains, the distribution of food and medicine, finance, and security policies. The smartness mandate draws on multiple and intersecting discourses, including ecology, evolutionary biology, computer science, and economics. Binding and bridging these discourses are technologies, instruments, apparatuses, processes, and architectures. These experimental networks of responsive machines, computer mainframes, political bodies, sensing devices, and spatial zones lend durable and material form to smartness, often allowing for its expansion and innovation with relative autonomy from its designers and champions.

Figure 3. Apple Computer. “Think Different” advertisement campaign, 1998. Detail. From Time, 2 February 1998.


This essay illuminates some of the key ways in which the history and logic of the smartness mandate are dynamically embedded in the objects and operations of everyday life—particularly the everyday lives of those living in the wealthier Global North, but ideally, for the advocates of smartness, the lives of every inhabitant of the globe. This approach allows us to consider questions such as, What kinds of assumptions link the “predictive” product suggestions made to a global public by retailers such as Amazon or Netflix with the efforts of South Korean urban-planning firms and Indian economic policy makers to monitor, and adapt in real time to, the activities of their urban citizenry? What kinds of ambitions permit the migration of statistically based modeling techniques from relatively banal consumer applications to regional and transnational strategies of governance? How do smart technologies that enable socially networked applications for smartphones—for example, the Evernote app for distributed multisite and multiuser note taking used by 200 million registered users located primarily in the United States, Europe, Latin America, and Asia—also cultivate new forms of global labor and governmentality, the unity of which resides in coordination via smart platforms rather than, for example, geography or class? Each of these examples relies upon the intermediation of networks and technologies that are designated as “smart,” yet the impetus for innovation and the agents of this smartness often remain obscure.

We see the brief history of smartness as a decisive moment in histories of reason and rationality. In their helpful account of “Cold War rationality,” Paul Erickson and his colleagues argue that in the years following World War II, American science, politics, and industry witnessed “the expansion of the domain of rationality at the expense of … reason,” as machinic systems and algorithmic procedures displaced judgment and discretion as ideals of governing rationally.3 Yet at the dawn of the twenty-first century, Cold War rationality has given way to the tyranny of smartness, an eternally emergent program of real-time, short-term calculation that substitutes “demos” (i.e., provisional models) and simulations for those systems of artificial intelligence and professional expertise and calculation imagined by Cold War rationalists. In place of Cold War warring systems based on “rational” processes that could still fall under the control and surveillance of centralized authorities or states, the smartness mandate embraces the ideal of an infinite range of experimental existences, all based on real-time adaptive exchanges among users, environments, and machines. Neither reason nor rationality is understood as a necessary guide for these exchanges, for smartness is presented as a self-regulating process of “optimization” and “resilience” (terms that, as we note below, are themselves moving targets in a recursive system).

Where Cold War rationality was highly suspicious of innovation, the latter is part of the essence of smartness. In place of the self-stabilizing systems and homeostasis that were the orienting ideal of Cold War theorists, smartness assumes perpetual growth and unlimited turmoil. Destruction, crisis, and the absence of architectonic order or rationality are the conditions of possibility for smart growth and optimization. Equally important: whereas Cold War rationality emanated primarily from the conceptual publications of a handful of well-funded think tanks, which tended to understand national populations and everyday culture as masses that need to be guided, smartness pervades cell phones, delivery trucks, and healthcare systems and relies intrinsically on the interactions among, and the individual idiosyncrasies of, millions or even billions of individuals around the planet. Moreover, whereas Cold War rationality was dominated by the thought of the doppelgänger rival (e.g., the United States vs. the Soviet Union; the East vs. the West), smartness is not limited to binaries.4 Rather, it understands threats as emerging from an environment, which, because it is always more complex than the systems it encompasses, can never be captured in the simple schemas of rivalry or game theory. This, in turn, allows smartness to take on an ecological dimension: the key crisis is no longer simply that emerging from rival political powers or nuclear disaster but is any unforeseeable event that might emerge from an always too-complex environment.

If smartness is what follows Cold War understandings of reason and rationality, the smartness mandate is the political imperative that smartness be extended to all areas of life. In this sense, the smartness mandate is what follows “the shock doctrine,” powerfully described by Naomi Klein and others.5 As Klein notes in her book of the same name, the shock doctrine was a set of neoliberal assumptions and techniques that taught policy makers in the 1970s to take advantage of crises to downsize government and deregulate in order to extend the “rationality” of the free market to as many areas of life as possible. The smartness mandate, we suggest, is the current instantiation of a new technical logic with equally transformative effects on conceptions and practices of governance, markets, democracy, and even life itself. Yet where the shock doctrine imagined a cadre of experts and advisors deployed to various national polities to liberate markets and free up resources at moments of crisis, the smartness mandate both understands crisis as a normal human condition and extends itself by means of a field of plural agents—environments, machines, populations, data sets—that interact in a complex manner and without recourse to what was earlier understood as reason or intelligence. If the shock doctrine promoted the idea that systems had to be “fixed” so that natural economic relationships could express themselves, the smartness mandate deploys ideas of resilience and practices management without ideals of futurity or clear measures of “success” or “failure.” We describe this imperative to develop and instantiate smartness everywhere as a mandate in order to capture both its political implications—although smartness is presented by its advocates as politically agnostic, it is more accurately viewed as completely reconfiguring the realm of the political—and the premise that smartness is possible only by drawing upon the “collective intelligence” of large populations.

We seek to sketch the deep logic of smartness and its mandate in four sections, each focused on a different aspect. These sections take up the following questions: (1) Where does smartness happen; that is, what kind of space does smartness require? (2) What is the agent of smartness; that is, what, precisely, enacts or possesses smartness? (3) What is the key operation of smartness; that is, what does smartness do? (4) What is the purported result of smartness; that is, at what does it aim? Our answers to these four questions are the following:

- The territory of smartness is the zone.

- The (quasi-)agent of smartness is populations.

- The key operation of smartness is optimization.

- Smartness produces resilience.

Focusing on how the logics and practices of zones, populations, optimization, and resilience are coupled enables us to illuminate not just particular instantiations of smartness—for example, smart cities, grids, or phones—but smartness more generally, as well as its mandate (“every process must become smart!”).

Our analysis draws inspiration from Michel Foucault’s concepts of governmentality and biopolitics, Gilles Deleuze’s brief account of “the control society,” and critical work on immaterial labor. We describe smartness genealogically; that is, as a concept and set of practices that emerged from the coupling of logics and techniques from multiple fields (ecology, computer science, policy, etc.). We also link smartness to the central object of biopolitics—populations—and see smartness as bound up with the key goal of biopolitics: governmentality. And we emphasize the importance of a mode of control based on what Deleuze describes as open-ended modulation rather than the permanent molding of discipline. We also underscore the centrality of data drawn from the everyday activities of large numbers of people. Yet insofar as smartness positions the global environment as the fundamental orienting point for all governance—that is, as the realm of governance that demands all other problems be seen from the perspective of zones, populations, resilience, and optimization—the tools offered by existing concepts of biopolitics, the control society, and immaterial labor take us only part of the way in our account.6

Zones

Smartness has to happen somewhere. However, advocates of smartness generally imply or explicitly note that its space is not that of the national territory. Palmisano’s invocation of a smarter planet, for example, emphasizes the extraterritorial space that smartness requires: precisely because smartness aims in part at ecological salvation, its operations cannot be restricted to the limited laws, territory, or populations of a given national polity. So, too, designers of “smart homes” imagine a domestic space freed by intelligent networks from the physical constraints of the home, while the fitness app on a smartphone conditions the training of a single user’s body through iterative calculations correlated with thousands or millions of other users spread across multiple continents.7 These activities all occur in space, but the nation-state is neither their obvious nor necessary container, nor is the human body and its related psychological subject their primary focus, target, or even paradigm (e.g., smartness often employs entities such as “swarms” that are never intended to cohere in the manner of a rational or liberal subject). At the same time, though, smartness also depends on complicated and often delicate infrastructures—fiber-optic cable networks and communications systems capable of accessing satellite data; server farms that must be maintained at precise temperatures; safe shipping routes—that are invariably located at least in part within national territories and are often subsidized by federal governments. Smartness thus also requires the support of legal systems and policing that protect and maintain these infrastructures, and most of the latter are provided by national states (even if only in the form of subcontracted private security services).8

This paradoxical relationship of smartness to national territories is best captured as a mutation of the contemporary form of space known as “zones.” Related to histories of urban planning and development, where zoning has long been an instrument in organizing space, contemporary zones have new properties married to the financial and logistical practices that underpin their global proliferation. In the past two decades, numerous urban historians and media theorists have redefined the zone in terms of its connection to computation and described the zone as the dominant territorial configuration of the present. As architectural theorist Keller Easterling notes, the zone should be understood as a method of “extrastatecraft” intended to serve as a platform for the operation of a new “software” for governing human activity. Brett Neilson and Ned Rossiter invoke the figure of the “logistical city” or zone to make the same point about governmentality and computation.9

Zones denote not the demise of the state but the production of new forms of territory, the ideal of which is a space of exception to national and often international law. A key example is the so-called free-trade zone. Free-trade zones are a growing phenomenon, stretching from Pudong District in Shanghai to the Cayman Islands, and even the business districts and port facilities of New York State, and are promoted as conduits for the smooth transfer of capital, labor, and technology globally (with smooth defined as a minimum of delay as national borders are crossed). Free-trade zones are in one sense discrete physical spaces, but they also require new networked infrastructures linked through the algorithms that underwrite geographic information systems (GIS) and global positioning systems (GPS) and computerized supply-chain management systems, as well as the standardization of container and shipping architecture and regulatory legal exceptions (to mention just some of the protocols that produce these spaces). Equally important, zones are understood as outside the legal structure of a national territory, even if they technically lie within its space.10

In using the term zone to describe the space of smartness, our point is not that smartness happens in places such as free-trade zones but that smartness aims to globalize the zonal logic, or mode, of space. This logic of geographic abstraction, detachment, and exemption is exemplified even in mundane consumer items such as activity monitors—for example, the Fitbit—that link data about the physical activities of a user in one jurisdiction with the data of users in other jurisdictions. This logic of abstraction is more fully exemplified by the emergence of so-called smart cities. An organizing principle of the smart city is that civic governance and public taxation will be driven, and perhaps replaced, by automated and ubiquitous data collection. This ideal of a “sensorial” city that serves as a conduit for data gathering and circulation is a primary fantasy enabling smart cities, grids, and networks. Consider, for example, a prototype “greenfield” (i.e., from scratch) smart-city development, such as Songdo in South Korea. This smart city is designed with a massive sensor infrastructure for collecting traffic, environmental, and closed-circuit television (CCTV) data and includes individual smart homes (apartments) with multiple monitors and touch screens for temperature control, entertainment, lighting, and cooking functions. The city’s developers also hope these living spaces will eventually monitor multiple health conditions through home testing. Implementing this business plan, however, will require either significant changes to, or exemptions from, South Korean laws about transferring health information outside of hospitals. Lobbying efforts for this juridical change have been promoted by Cisco Systems (a U.S.-based network infrastructure provider), the Incheon Free Economic Zone (the governing local authority), and Posco (a Korean chaebol involved in construction and steel refining), the three most dominant forces behind Songdo.

Figure 4. Songdo, South Korea, 2014. Photo: Orit Halpern.


What makes smart territories unique in a world of zonal territories is the specific mode by which smartness colonizes space through the management of time (and this mode also helps explain why smartness is so successful in promulgating itself globally). As demonstrated by former IBM chair Palmisano’s address to the Council on Foreign Relations, smartness is predicated on an imaginary of “crisis” that is to be managed through a massive increase in sensing devices, which in turn purportedly enable self-organization and constant self-modulating and self-updating systems. Smart platforms link zones to crisis via two key operations: (1) a temporal operation, by means of which uncertainty about the future is managed through constant redescription of the present as a “version,” “demo,” or “prototype” of the future; and (2) an operation of self-organization through which earlier discourses about structures and the social are replaced by concerns about infrastructure, a focus on sensor systems, and a fetish for big data and analytics, which purportedly can direct “development” in the absence of clear-cut ends or goals.

In this sense, the development of smart cities such as Songdo follows a logic of software development. Every present state of the smart city is understood as a demo or prototype of a future smart city. Every operation in the smart city is understood in terms of testing and updating. Engineers interviewed at the site openly spoke of it as an “experiment” and “test,” admitting that the system did not work but stressing that problems could be fixed in the next instantiation elsewhere in the world.11 As a consequence, there is never a finished product but rather infinitely replicable yet always preliminary, never-to-be-completed versions of these cities around the globe.

This temporal operation is then linked to an ideal of self-organization. Smartness largely refers to computationally and digitally managed systems, from electrical grids to building management systems, that can learn and, in theory, adapt by analyzing data about themselves. Self-organization is thus linked to the operation of optimization. Systems correct themselves automatically by adjusting their own operations. This organization is imagined as being immanent to the physical and informational system at hand—that is, as optimized by computationally collected data rather than by “external” political or social actors. At the heart of the smartness mandate is thus a logic of immanence, by means of which sensor instrumentation, adjoined to emerging and often automated methods for the analysis of large data sets, allows a dynamic system to detect and direct its continued growth.12

One of the key, troubling consequences of demoing and self-organization as the two zonal operations of smartness is that the overarching concept of “crisis” begins to obscure differences among kinds of catastrophes. While every crisis event—for example, the 2008 subprime mortgage collapse or the Tohoku earthquake of 2011—is different, within the demo-logic that underwrites the production of smart and resilient cities, these differences can be subsumed under the general concept of crisis and addressed through the same methods (the implications of which must never be fully engaged because we are always “demoing” or “testing” solutions, never actually solving the problem). Whether threatened by terrorism, subprime mortgages, energy shortages, or hurricanes, smartness always responds in essentially the same way. The demo is a form of temporal management that through its practices and discourses evacuates the historical and contextual specificity of individual catastrophes and evades ever having to assess or represent the impact of these infrastructures, because no project is ever “finished.” This evacuation of differences, temporalities, and societal structures is what most concerns us in confronting the extraordinary rise of ubiquitous computing and high-tech infrastructures as solutions to political, social, environmental, and historical problems confronting urban design and planning, and as engines for producing new forms of territory and governance.

Populations

If zones are the places in which smartness takes place, populations are the agents—or, more accurately, the enabling medium—of smartness. Smartness is located neither in the source (producer) nor in the destination (consumer) of a good such as a smartphone but is the outcome of the algorithmic manipulation of billions of traces left by thousands, millions, or even billions of individual users. Smartness requires these large populations, for they are the medium of the “partial perceptions” within which smartness emerges. Though these populations should be understood as fundamentally biopolitical in nature, it is more helpful first to recognize the extent to which smartness relies on an understanding of population drawn from twentieth-century biological sciences such as evolutionary biology and ecology.

Biologists and ecologists often use the term population to describe large collections of individuals with the following characteristics: (1) the individuals differ at least slightly from one another; (2) these differences allow some individuals to be more “successful” vis-à-vis their environment than other individuals; (3) a form of memory enables differences that are successful to appear again in subsequent generations; and, as a consequence, (4) the distribution of differences across the population tends to change over time.13 This emphasis on the importance of individual difference for long-term fitness thus distinguishes this use of the term population from more common political uses of the term to describe the individuals who live within a political territory.14

Smartness takes up a biologically oriented concept of population but repurposes it for nonbiological contexts. Smartness presumes that each individual is distinct not only biologically but in terms of, for example, habits, knowledge, and consumer preferences, and that information about these individual differences can usefully be grouped together so that algorithms can locate subgroupings of this data that thrive or falter in the face of specific changes. Though the populations of data drawn from individuals may map onto traditional biological or political divisions, groupings and subgroupings more generally revolve around consumer preferences and are drawn from individuals in widely separated geographical regions and polities. (For example, Netflix’s populations of movie preferences are currently created from users distributed throughout 190 countries.)15

Moreover, though these data populations are (generally) drawn from human beings, they are best understood as distinct from the human populations from which they emerge: these are simply data populations of, for example, preferences, reactions, or abilities. This is true even in the case of information drawn from human bodies located in the same physical space. In the case of the smart city, the information streaming from fitness trackers, smartphones, credit cards, and transport cards is generated by human bodies in close physical proximity to one another, but individual data populations are then agglomerated at different temporalities and scales, depending on the problem being considered (transportation routing, energy use, consumer preferences, etc.). These discrete data populations enable processes to be optimized (i.e., enable “fitness” to be determined), which in turn produces new populations of data and hence a new series of potentialities for what a population is and what potentials these populations can generate.

A key premise of smartness is that while each member of a population is unique, it is also “dumb”—that is, limited in its “perception”—and that smartness emerges as a property of the population only when these limited perspectives are linked via environment-like infrastructures. Returning to the example of the smartphone operating in a smart city, the phone becomes a mechanism for creating data populations that operate without the cognition or even direct command of the subject. (The smartphone, for example, automatically transmits its location and can also transmit other data about how it has been used.) If, in the biological domain, populations enable long-term species survival, then in the cultural domain populations enable smartness, provided the populations are networked together with smart infrastructures. Populations are the perceptual substrate that enables modulating interactions among agents within a system that sustains particular activities. The infrastructures ensure, for example, that “given enough eyeballs, all bugs are shallow” (Linus’s Law); that problems can be “crowdsourced”; and that such a thing as “collective intelligence” exists.16 The concept of population also allows us to understand better why the zone is the necessary kind of space enabling smartness, for there is often no reason that national borders would parse population differences (in abilities, interests, preferences, or biology) in any way that is meaningful for smartness.

This creation and analysis of data populations is biopolitical in the sense initially outlined by Foucault, but smartness is also a significant mutation in the operation of biopolitics. Foucault stresses that the concept of population was central to the emergence of biopolitics in the late eighteenth century, for it denoted a “collective body” that had its own internal dynamics (of births, deaths, illness, etc.) that were quasi-autonomous in the sense that they could not be commanded or completely prevented by legal structures but could nevertheless be subtly altered through biopolitical regulatory techniques and technologies (e.g., required inoculations; free-market mechanisms).17 On the one hand, smartness is biopolitical in this same sense, for the members of its populations—populations of movie watchers, cell phone users, healthcare purchasers and users, and so on—are assumed to have their own internal dynamics and regularities, and the goal of gathering information about these dynamics is not to discipline individuals into specific behaviors but to find points of leverage within these regularities that can produce more subtle and widespread changes.

On the other hand, the biopolitical dimension of smartness

cannot be understood as simply “more of the same,” for four

reasons. First, and in keeping with Deleuze’s reflections on the

control society, the institutions that gather data about populations

are now more likely to be corporations than states.18

Second (and as a consequence of the first point), smartness’s

data populations often concern not those clearly biological

events on which Foucault focused, but variables such as attention,

consumer choices, and transportation preferences. Third,

though the data populations that are the medium of smartness

are often drawn from populations of human beings, these data

relate differently to individuals than in the case of Foucault’s

more health-oriented examples. Data populations themselves

often do not need to be (and cannot be) mapped directly back

onto discrete human populations: one is often less interested

in discrete events that happen infrequently along the individual

biographies of a polity (e.g., smallpox infections) than in

frequent events that may happen across widely dispersed groups

of people (e.g., movie preferences). The analysis of these data

populations is then used to create, via smart technologies, an

individual and customized “information-environment” around

each individual. The aim is not to discipline individuals, in

Foucault’s sense, but to extend ever deeper and further the

quasi-autonomous dynamics of populations. Fourth, in the case

of systems such as high-speed financial trading and derivatives,

as well as in the logistical management of automated supply

chains, entire data populations are produced and acted on

directly through entirely machine-to-machine data gathering,

communication, analytics, and action.19 These new forms of

automation and of producing populations mark transformations

in both the scale and intensity of the interweaving of algorithmic

calculation and life.

Optimization

Smartness emerges when zones link the increasingly fine-grained,

quasi-autonomous dynamics of populations for the sake of

optimization. This pursuit of “the best”—the fastest route

between two points, the most reliable prediction of a product a

consumer will like, the least expenditure of energy in a home,

the lowest risk and highest return in a financial portfolio—is

what justifies the term smartness. Contemporary optimization

is a fundamentally quantitative and calculation-intensive operation;

it is a matter of finding, given specified constraints,

maxima or minima. Locating these limits in population data

often requires millions or billions of algorithmic mathematical

calculations—hence the role of computers (which run complex algorithms at speeds that are effectively “real-time” for human

beings), globally distributed sensors (which enable constant

global updating of distributed information), and global communications

networks (which connect those sensors with that

computing power).

Though optimization has a history, including techniques of

industrial production and sciences of efficiency and fatigue

pioneered in the late nineteenth and early twentieth centuries

by Frederick Winslow Taylor and Frank Gilbreth, its current

instantiations radically differ from earlier ones.20 The term

optimization appears to have entered common usage in English

only following World War II.21 Related to emerging techniques

such as game-theoretical tools and computers, optimization is a

particular form of efficiency measure. To optimize is to find the

best relationship between the minimum and maximum performance

of a system. Optimization is not a normative or absolute measure

of performance but an internally referential and relative

one; it thus mirrors the temporality of the test bed, the version,

and the prototype endemic to “smart” cities and zones.

Optimization is the technique by which smartness promulgates

the belief that everything—every kind of relationship among

human beings, their technologies, and the environments in

which they live—can and should be algorithmically managed.

Shopping, dating, exercising, the practice of science, the

distribution of resources for public schools, the fight against

terrorism, the calculation of carbon offsets and credits: these

processes can—and must!—be optimized. Optimization fever

propels the demand for ever-more sensors—more sites of data

collection, whether via mobile device apps, hospital clinic

databases, or tracking of website clicks—so that optimization’s

realm can perpetually be expanded and optimization itself

further optimized. Smart optimization also demands the ever-increasing

evacuation of private interiority on the part of individuals,

for such privacy is now often implicitly understood as

an indefensible withholding of information that could be used

for optimizing human relations.22

Smart optimization also presumes a new, fundamentally

practical epistemology, for smartness is not focused on determining

absolutely correct (i.e., “true”) solutions to optimization

problems. The development of calculus in the seventeenth century

encouraged the hope that, if one could simply find an equation

for a curve that described a system, it would then always be

possible in principle to locate absolute, rather than simply local,

maxima and minima for that system. However, the problems

engaged by smartness—for example, travel mapping, healthcare

outcomes, risk portfolios—often have so many variables and

dimensions that completely solving them, even in principle, is

impossible. As Dan Simon notes, even a problem as apparently

simple as determining the optimal route for a salesperson

who needs to visit fifty cities would be impossible if one were to

try to calculate all possible solutions. There are $49! \approx 6.1 \times 10^{62}$

possible solutions to this problem, which is beyond the capability of contemporary computing: even if one had a trillion computers, each capable of calculating a trillion solutions per second, and these computers had been calculating since the universe began—a total computation time of 15 billion years—they would not yet have come close to calculating all possible routes.23
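The arithmetic in Simon's example is easy to check. The short Python sketch below computes 49! exactly and compares it with the number of routes the imagined trillion computers could evaluate in 15 billion years. The machine count, speed, and duration come from the quotation; the length of a year (365.25 days) is our own assumption.

```python
import math

# Distinct tours for a 50-city salesperson problem with the starting city
# fixed, as counted in the quoted example: 49!
routes = math.factorial(49)

# Figures from the quotation: a trillion computers, each checking a
# trillion routes per second, running for 15 billion years.
computers = 10**12
routes_per_second = 10**12
seconds_per_year = 365.25 * 24 * 60 * 60  # assumption: a 365.25-day year
seconds = 15e9 * seconds_per_year

checked = computers * routes_per_second * seconds

print(f"49! = {routes:.2e}")              # 6.08e+62
print(f"routes checked = {checked:.2e}")  # 4.73e+41
print(f"fraction covered = {checked / routes:.1e}")
```

Even this absurdly large machine would examine less than one route in every 10^21, which is the point of the illustration.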

In the face of the impossibility of determining absolute maxima

or minima for these systems by so-called brute force (i.e., calculating

and comparing all possible solutions), contemporary

optimization instead involves finding good-enough solutions:

maxima and minima that may or may not be absolute but are

more likely than other solutions to be close to absolute maxima

or minima. The optimizing engineer selects among different

algorithmic methods that each produce, in different ways and

with different results, good-enough solutions.
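The contrast between brute force and "good-enough" solutions can be made concrete with a minimal Python sketch. It uses two textbook heuristics for the salesperson problem, a nearest-neighbour tour refined by 2-opt segment reversals, as stand-ins for the algorithmic methods mentioned above; we do not claim that any particular engineer uses them. On a deliberately tiny random instance (8 cities, an arbitrary choice), brute force is still feasible, so the heuristic's answer can be checked against the true optimum.

```python
import itertools
import math
import random

def tour_length(cities, order):
    """Total length of the closed tour that visits cities in the given order."""
    n = len(order)
    return sum(math.dist(cities[order[i]], cities[order[(i + 1) % n]]) for i in range(n))

def brute_force(cities):
    """Exact optimum: try every ordering, with city 0 fixed as the start."""
    best = min(itertools.permutations(range(1, len(cities))),
               key=lambda p: tour_length(cities, (0,) + p))
    return [0, *best]

def nearest_neighbour(cities):
    """Greedy construction: always travel to the closest unvisited city."""
    order, unvisited = [0], set(range(1, len(cities)))
    while unvisited:
        last = cities[order[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(last, cities[j]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order

def two_opt(cities, order):
    """Local search: keep reversing tour segments while that shortens the tour."""
    improved = True
    while improved:
        improved = False
        for i in range(1, len(order) - 1):
            for j in range(i + 1, len(order)):
                candidate = order[:i] + order[i:j + 1][::-1] + order[j + 1:]
                if tour_length(cities, candidate) < tour_length(cities, order) - 1e-12:
                    order, improved = candidate, True
    return order

random.seed(0)
cities = [(random.random(), random.random()) for _ in range(8)]
exact = tour_length(cities, brute_force(cities))
heuristic = tour_length(cities, two_opt(cities, nearest_neighbour(cities)))
print(f"optimal: {exact:.3f}  good-enough: {heuristic:.3f}")
```

Brute force becomes hopeless beyond a dozen or so cities, while the heuristic still runs on far larger instances; the price is that nothing guarantees its tour is the shortest, only that it is often close.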

In the absence of any way to calculate absolute maxima and

minima, the belief that smartness nevertheless locates “best”

solutions is supported both technically and analogically. This

belief is supported technically in that different optimization

algorithms are run on “benchmark” problems—that is, problems

that contain numerous local maxima and minima but for which

the absolute maximum or minimum is known—to determine how

well the algorithms perform on those types of problems.24 If an

algorithm runs well on a benchmark problem, then it is presumed

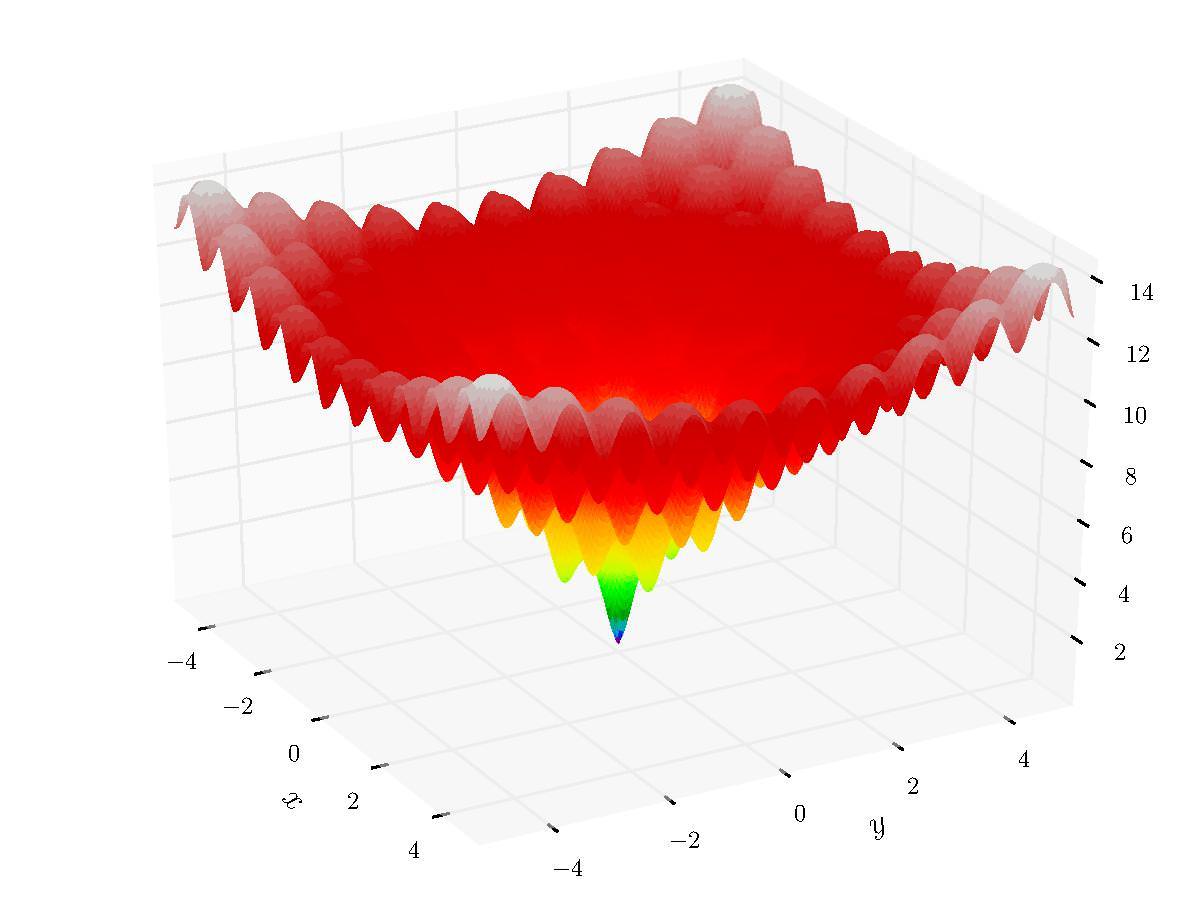

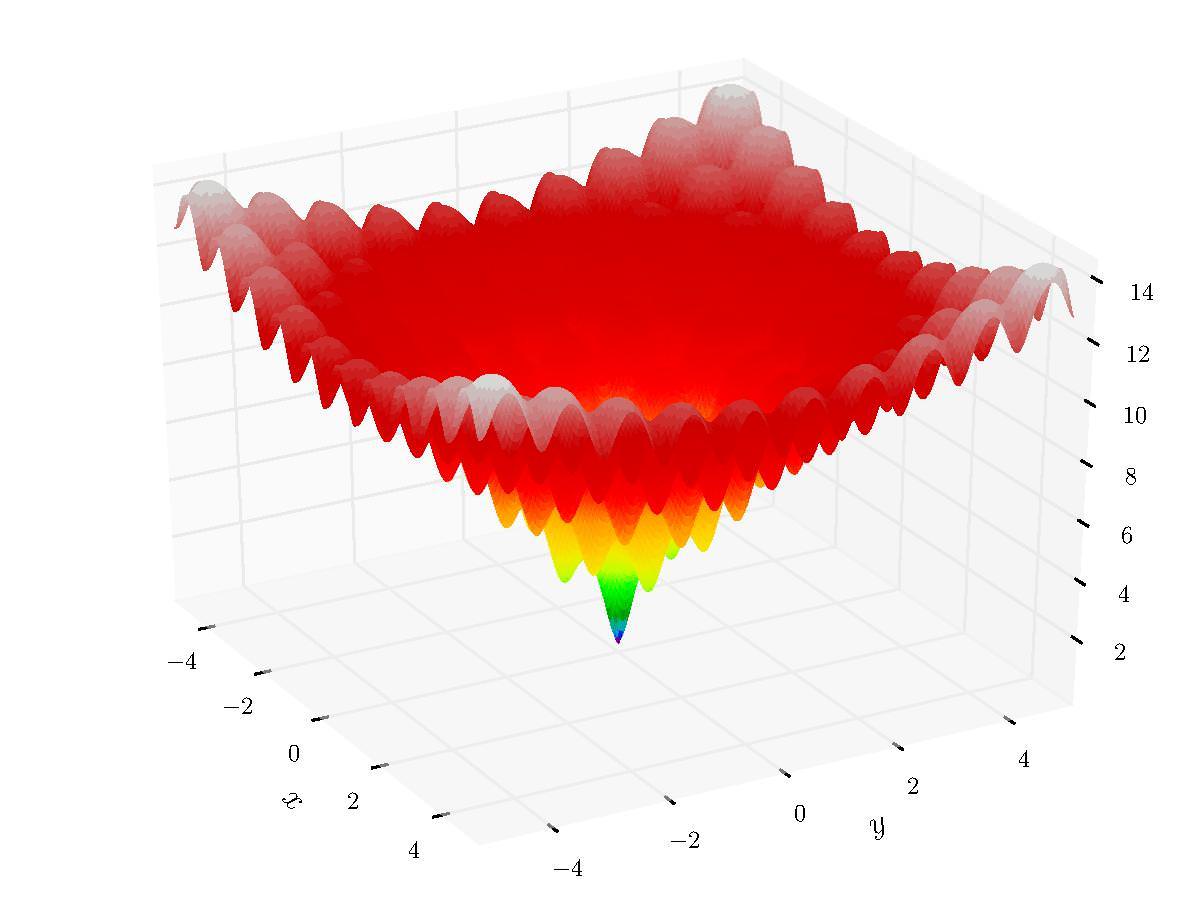

to be more likely to run well on similar real-world problems. Figure 5 Ackley benchmark function. Image: Gaortizg, Wikimedia Commons.

Ackley benchmark function. Image: Gaortizg, Wikimedia Commons.
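A benchmark such as the Ackley function of Figure 5 takes only a few lines to write down. The sketch below assumes the parameter values most commonly quoted for it (a = 20, b = 0.2, c = 2π), which we take to match the figure, giving $f(\mathbf{x}) = -a\exp\big(-b\sqrt{\tfrac{1}{d}\sum_i x_i^2}\big) - \exp\big(\tfrac{1}{d}\sum_i \cos(c\,x_i)\big) + a + e$. It pairs the function with a deliberately naive local search (our own illustrative choice) to show why such functions serve as tests: the global minimum is known to be 0 at the origin, yet a searcher started near (3, 3) settles into one of the many surrounding local minima.

```python
import math

def ackley(x, a=20.0, b=0.2, c=2 * math.pi):
    """Ackley benchmark function in any dimension; global minimum 0 at the origin."""
    d = len(x)
    mean_sq = sum(v * v for v in x) / d
    mean_cos = sum(math.cos(c * v) for v in x) / d
    return -a * math.exp(-b * math.sqrt(mean_sq)) - math.exp(mean_cos) + a + math.e

def greedy_descent(f, start, step=0.01, max_iter=10_000):
    """Naive local search: nudge one coordinate by +/- step whenever that lowers f."""
    x = list(start)
    fx = f(x)
    for _ in range(max_iter):
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                trial = x.copy()
                trial[i] += delta
                f_trial = f(trial)
                if f_trial < fx:
                    x, fx, improved = trial, f_trial, True
        if not improved:
            break  # no neighbouring move helps: a local minimum at this step size
    return x, fx

print(ackley([0.0, 0.0]))  # about 0, up to floating-point rounding
x_local, f_local = greedy_descent(ackley, [3.0, 3.0])
print(x_local, f_local)    # stuck near (3, 3), well above the known minimum of 0
```

Because the true minimum is known in advance, any algorithm's result on this surface can be scored exactly, which is what makes it usable as a benchmark.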

The belief that smartness finds the best solutions is also

often supported by the claim that many contemporary optimization

algorithms mimic natural processes, especially computational

ideals of biological evolution.25 An evolutionary algorithm begins

with the premise that natural biological evolution automatically

solves optimization problems by means of natural populations.

The algorithm then seeks to simulate that process by

creating populations of candidate solutions, which are mixed

with one another (elements of one candidate solution are combined

with elements of other candidate solutions) and culled

through successive generations to produce increasingly good

solutions. David B. Fogel, a consultant for the informatics firm

Natural Selection, Inc., which applies computational models to

the streamlining of commercial activities, captures this sense of

optimization as simply a continuation of nature’s work: “Natural

evolution is a population-based optimization process. Simulating

this process on a computer results in stochastic optimization

techniques that can often outperform classical methods of optimization

when applied to difficult real-world problems.”26 Optimization research implements the features of natural evolution (reproduction, mutation, competition, and selection) in computers to

find “natural” laws that can govern the organization of industrial

or other processes that, when implemented on a broad scale,

become the conditions of life itself.
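The population-based procedure described above can be sketched as a minimal evolutionary algorithm. This is a generic textbook scheme, sometimes called a (μ + λ) strategy, and not Fogel's own method or that of Natural Selection, Inc.; the population size, mutation width, number of generations, and the use of the Ackley benchmark as the problem are all arbitrary assumptions made for illustration. Fogel's four features appear as recombination of two parents (reproduction), Gaussian noise (mutation), and truncation of parents plus children to the best half (competition and selection).

```python
import math
import random

def ackley(x):
    """Ackley benchmark (a=20, b=0.2, c=2*pi); global minimum 0 at the origin."""
    d = len(x)
    mean_sq = sum(v * v for v in x) / d
    mean_cos = sum(math.cos(2 * math.pi * v) for v in x) / d
    return -20 * math.exp(-0.2 * math.sqrt(mean_sq)) - math.exp(mean_cos) + 20 + math.e

def evolve(f, dim=2, pop_size=40, generations=200, sigma=0.3, bound=5.0, seed=1):
    """Minimal (mu + lambda) evolutionary algorithm minimising f."""
    rng = random.Random(seed)
    # A population of random candidate solutions.
    pop = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        children = []
        for _ in range(pop_size):
            # Reproduction: pick two parents and mix their coordinates.
            p1, p2 = rng.sample(pop, 2)
            child = [rng.choice(genes) for genes in zip(p1, p2)]
            # Mutation: add Gaussian noise to every coordinate.
            child = [v + rng.gauss(0.0, sigma) for v in child]
            children.append(child)
        # Competition and selection: parents and children compete, and only
        # the pop_size best (lowest f) survive into the next generation.
        pop = sorted(pop + children, key=f)[:pop_size]
    best = pop[0]
    return best, f(best)

best, value = evolve(ackley)
print(best, value)
```

Because selection here keeps the best individuals, the best value can only improve from one generation to the next; reaching the true optimum is still not guaranteed, which is precisely the "good-enough" status of such solutions.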

This vision of optimization then justifies the extension and

intensification of the zonal logic of smartness. To optimize all aspects of existence, smartness must be able to locate its relevant

populations (of preferences, events, etc.) wherever they

occur. However, this is possible only when every potential data

point (i.e., partial perception) on the globe can be directly

linked to every other potential data point without interference

from specific geographic jurisdictional regimes. This does not

mean the withering of geographically based security apparatuses;

on the contrary, optimization often requires strengthening

these to protect the concrete infrastructures that enable

smart networks and to implement optimization protocols. Yet,

like the weather or global warming, optimization is not to be

restricted to, or fundamentally parsed by, the territories that

fund and provide these security apparatuses but must be

allowed to operate as a sort of external environmental force.

Resilience

If smartness happens through zones, if its operations require

populations, and if it aims most fundamentally at optimization, what is the telos of smartness itself? That is, at what does

smartness aim, and why is smartness understood as a virtue?

The answer is that smartness enables resilience. This is its goal

and raison d’être. The logic of resilience is peculiar in that it aims

not precisely at a future that is “better” in any absolute sense but

at a smart infrastructure that can absorb constant shocks while

maintaining functionality and organization. Following the work

of Bruce Braun and Stephanie Wakefield, we describe resilience

as a state of permanent management that does without guiding

ideals of progress, change, or improvement.27

The term resilience plays important, though differing, roles

in multiple fields. These include engineering and material sciences—

since the nineteenth century, the “modulus of resilience”

has measured the capacity of materials such as woods and

metals to return to their original shape after impact—as well as

ecology, psychology, sociology, geography, business, and public

policy, where resilience names the ways in which ecosystems, individuals, communities, corporations, and states respond

to stress, adversity, and rapid change.28 However, the understanding

of resilience most crucial to smartness and the smartness

doctrine was first forged in ecology in the 1970s, especially

in the work of C.S. Holling, who established a key distinction

between “stability” and “resilience.” Working from a systems

perspective and interested in the question of how human beings

could best manage elements of ecosystems of commercial

interest (e.g., salmon, wood), Holling developed the concept of

resilience to contest the premise that ecosystems were most

healthy when they returned quickly to an equilibrium state after

being disturbed (and in this sense his paper critiqued then-current

industry practices).

Holling defined stability as the ability of a system that had

been perturbed to return to a state of equilibrium, but he

argued that stable systems were often unable to compensate for

significant, swift environmental changes. As Holling writes,

the “stability view [of ecosystem management] emphasizes the

equilibrium, the maintenance of a predictable world, and the

harvesting of nature’s excess production with as little fluctuation

as possible,” yet this approach that “assures a stable maximum

sustained yield of a renewable resource might so change

[the conditions of that system] … that a chance and rare event

that previously could be absorbed can trigger a sudden dramatic

change and loss of structural integrity of the system.”29

Resilience, by contrast, denotes for Holling the capacity of a

system to change in periods of intense external perturbation

and thus to persist over longer time periods. The concept of

resilience encourages a management approach to ecosystems

that “would emphasize the need to keep options open, the need

to view events in a regional rather than a local context, and the

need to emphasize heterogeneity.” Resilience is thus linked to

concepts of crisis and states of exception; that is, it is a virtue

when crises and states of exception are assumed to be either

quasi-constant or the most relevant states. Holling also underscores

that the movement from stability to resilience depends

upon an epistemological shift: “Flowing from this would be not

the presumption of sufficient knowledge, but the recognition

of our ignorance: not the assumption that future events are

expected, but that they will be unexpected.”30

Smartness abstracts the concept of resilience from a systems

approach to ecology and turns it into an all-purpose epistemology

and value, positing resilience as a more general strategy

for managing perpetual uncertainty and encouraging the premise

that the world is indeed so complex that unexpected events are

the norm. Smartness enables this generalization of resilience in

part because it abstracts the concept of populations from the

specifically biological sense employed by Holling. Smartness

sees populations of preferences, traits, and algorithmic

solutions, as well as populations of individual organisms.

Resilience also functions in the discourse of smartness to collapse

the distinction between emergence (something new) and emergency (something new that threatens), and does so to

produce a world where any change can be technically managed

and assimilated while maintaining the ongoing survival of

the system rather than of individuals or even particular groups.

The focus of smartness is thus the management of the relationships

between different populations of data, some of which

can be culled and sacrificed for systemic maintenance.31

Planned obsolescence and preemptive destruction combine

here to encourage the introduction of more computation into

the environment—and emphasize as well that resilience of the

human species may necessitate the sacrifice of “suboptimal”

populations.

The discourse of resilience effectively erases the differences

among past, present, and future. Time is not understood through

an historical or progressive schema but through the schemas of

repetition and recursion (the same shocks and the same methods

are repeated again and again), even as these repetitions and

recursions produce constantly differing territories. This is a

self-referential difference, measured or understood only in

relation to the many other versions of smartness (e.g., earlier

smart cities), which all tend to be built by the same corporate

and national assemblages.

The collapse of emergence into emergency also links resilience

to financialization through derivation, as the highly leveraged

complex of Songdo demonstrates.32 The links that resilience

establishes among emergency, financialization, and derivatives

are also exemplified by New York City, which, after the devastation

of Hurricane Sandy in 2012, adopted the slogan “Fix and

Fortify.” This slogan underscores an acceptance of future shock

as a necessary reality of urban existence, while at the same time

leaving the precise nature of these shocks unspecified (though

they are often implied to include terrorism as well as environmental

devastation). The naturalization of this state is vividly

demonstrated by the irony that the real destruction of New

York had earlier been imagined as an opportunity for innovation,

design thinking, and real estate speculation. In 2010,

shortly before a real hurricane hit New York, the Museum of

Modern Art (MoMA) and P.S.1 Contemporary Art Center ran a

design competition and exhibition titled Rising Currents,

which challenged the city’s premier architecture and urban

design firms to design for a city ravaged by the elevated sea

levels produced by global warming:

MoMA and P.S.1 Contemporary Art Center joined forces

to address one of the most urgent challenges facing the

nation’s largest city: sea-level rise resulting from global

climate change. Though the national debate on infrastructure

is currently focused on “shovel-ready” projects that

will stimulate the economy, we now have an important

opportunity to foster new research and fresh thinking

about the use of New York City’s harbor and coastline. As

in past economic recessions, construction has slowed dramatically in New York, and much of the city’s remarkable

pool of architectural talent is available to focus on

innovation.33

A clearer statement of the relationship of urban planners to crisis

is difficult to imagine: Planning must simply assume and assimilate

future, unknowable shocks, and these shocks may come in

any form. This stunning statement turns economic tragedy, the

unemployment of most architects, and the imagined coming

environmental apocalypse into an opportunity for speculation—

technically, aesthetically, and economically. This is a

quite literal transformation of emergency into emergence that

creates a model for managing perceived and real risks to the

population and infrastructure of the territory not by “solving” the

problem but by absorbing shocks and modulating the way the environment

is managed. New York in the present becomes a mere

demo for the postcatastrophe New York, and the differential

between these two New Yorks is the site of financial, engineering,

and architectural interest and speculation.

This relationship of resilience to the logic of demos and

derivatives is illuminated by the distinction between risk and

uncertainty first laid out in the 1920s by the economist Frank

Knight. According to Knight, uncertainty, unlike risk, has

no clearly defined endpoints or values.34 Uncertainty offers no

clear-cut terminal events. If the Cold War was about nuclear

testing and simulation as a way to avoid an unthinkable but

nonetheless predictable event—nuclear war—the formula has

now been changed. We live in a world of fundamental uncertainty,

which can only ever be partially and provisionally captured

through discrete risks. When uncertainty, rather than

risk, is understood as the fundamental context, “tests” can no

longer be understood primarily as a simulation of life; rather,

the test bed makes human life itself an experiment for technological

futures. Uncertainty thus embeds itself in our technologies,

both those of architecture and of finance. In financial

markets, for example, risks that are never fully accounted for are

continually “swapped,” “derived,” and “leveraged” in the hope

that circulation will defer any need to represent risk, and in

infrastructure, engineering, and computing we do the same.35

As future risk is transformed into uncertainty, smart and

ubiquitous computing infrastructures become the language and

practice by which to imagine and create our future. Instead of

looking for utopian answers to our questions regarding the

future, we focus on quantitative and algorithmic methods and

on logistics—on how to move things rather than on questions

of where they should end up. Resilience as the goal of smart

infrastructures of ubiquitous computing and logistics becomes

the dominant method for engaging with possible urban collapse

and crisis (as well as the collapse of other kinds of infrastructure,

such as those of transport, energy, and finance). Smartness

thus becomes the organizing concept for an emerging form of

technical rationality whose major goal is management of an uncertain future through a constant deferral of future results;

for perpetual and unending evaluation through a continuous

mode of self-referential data collection; and for the construction

of forms of financial instrumentation and accounting that

no longer engage with (or even need to engage with), alienate, or

translate what capital extracts from history, geology, or life.

Smartness and Critique

Smartness is both a reality and an imaginary, and this commingling

underwrites both its logic and the magic of its popularity.

As a consequence, though, the critique of smartness cannot

simply be a matter of revealing the inequities produced by its

current instantiations. Critique is itself already central to smartness,

in the sense that perpetual optimization requires perpetual

dissatisfaction with the present and the premise that things

can always be better. The advocates of smartness can always

plausibly claim (and likely also believe) that the next demo will

be more inclusive, equitable, and just. The critique of smartness

thus needs to confront directly the terrible but necessary

complexity of thinking and acting within earthly-scale—and

even extraplanetary-scale—technical systems.

This means in part stressing, as we have done here, that the

smartness mandate transforms conditions of environmental

degradation, inequality and injustice, mass extinctions, wars,

and other forms of violence by means of the demand to understand

the present as a demo oriented toward the future, and by

necessitating a single form of response—increased penetration

of computation into the environment—for all crises. On the other

hand, not only the agency and transformative capacities of the

smart technical systems but the deep appeal of this approach

to managing an extraordinarily complex and ecologically fragile

world are impossible to deny. None of us are eager to abandon

our cell phones or computers. Moreover, the epistemology

of partial truths, incomplete perspectives, and uncertainty with

which Holling sought to critique capitalist understandings of

environments and ecologies still holds a weak messianic potential

for revising older modern forms of knowledge and for building

new forms of affiliation, agency, and politics grounded in

uncertainty, rather than objectivity and surety, and in this way

keeping us open to plural forms of life and thought. However,

insofar as smartness separates critique from conscious, collective,

human reflection—that is, insofar as smartness seeks to

steer communities algorithmically, in registers operating below

consciousness and human discourse—critiquing smartness will

in part be a matter of excavating and rethinking each of its

central concepts and practices (zones, populations, optimization,

and resilience), as well as the temporal logic that emerges

from the particular way in which smartness combines these

concepts and practices.